A communications platform for people with complete locked-in syndrome

Laying the foundations for future assistive devices

Goal | restoring communication for paralyzed patients

Status | Completed

Timeframe | 2018 – 2024

Area of Research | Brain-computer interface technology

Partners | HUG, EPFL, UMC Utrecht, TU Graz, CHUV

Lead | Jonas Zimmermann

Evolution | Part of the ABILITY project

A system for people with complete locked-in syndrome that allows consistent and fluent communication

When people are completely paralyzed and cannot move or speak, can they use their brain signals to communicate?

This project explores the ability of people to communicate following complete paralysis caused by amyotrophic lateral sclerosis (ALS). The results will lay the foundations for the development of future assistive devices and methods of communication for people who are completely locked-in.

ALS is a progressive neurodegenerative disorder in which deterioration of the nervous system responsible for voluntary movement eventually leads to paralysis, but in which the cognitive parts of the brain often continue to function normally. ALS is also known as Lou Gehrig’s disease or motor neurone disease (MND).

Some people with ALS progress into a complete locked-in state (CLIS) in which they lose the use of all muscles and survive with artificial ventilation and feeding. In CLIS, people have no way to communicate. When people can no longer speak but still have some remaining movement, they often use assistive communication devices to express themselves through a computer. Such devices include eye trackers, switches that detect muscle activity, and sip-and-puff devices that measure the air pressure of inhaled or exhaled breath. There is evidence that people with ALS can have a high sense of well-being, even in a locked-in state, so the ability to communicate is important to ensure a continued high quality of life and appropriate care.

As part of a single-patient case study, the Wyss Center team is working with a participant with ALS, his family, and the University of Utrecht to determine whether people with advanced ALS, who can no longer use assistive devices to communicate, can voluntarily form words and sentences with the help of an implanted brain-computer interface (BCI) system. “The participant we work with has a particularly fast-progressing form of the disease, and loss of communication was imminent when he was enrolled. While he was still able to move his eyes to communicate, he expressed his wish to take part in this case study,” said Jonas Zimmermann, Wyss Center Senior Neuroscientist.

The technology

An implanted brain-computer interface system including a neural signal decoder and an auditory feedback speller. Two microelectrode arrays, placed on the surface of the user’s brain, detect neural signals. A wired connection sends the neural data to a computer for processing. The Wyss Center’s NeuroKey software decodes the data and runs an auditory feedback speller that prompts the user to select letters to form words and sentences. The user learns how to alter their own brain activity according to the audio feedback they receive.

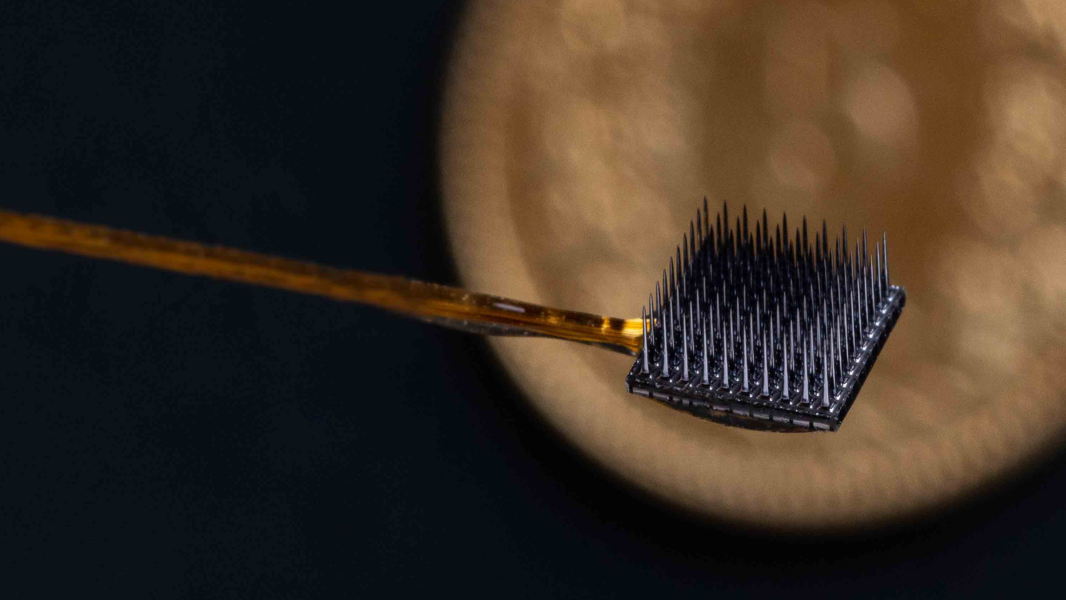

These tiny 3.2 × 3.2 mm microelectrode arrays are inserted into the surface of the motor cortex, the part of the brain responsible for movement. Each array has 64 needle-like electrodes that record neural signals, allowing patients to use a computer to select letters and ultimately spell words and sentences.

Recording of neural signals

Two microelectrode arrays record neural signals from the surface of the cortex. Each array is connected via implanted wires to a titanium pedestal mounted on the skull. Neural signals are transferred from the user to the signal processing computer via a cable.
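A back-of-the-envelope estimate gives a sense of how much raw data the cable carries. The channel count (two arrays of 64 electrodes) comes from the text; the 30 kHz sampling rate and 16-bit sample size are assumptions chosen for illustration, not reported parameters of this system.

```python
# Rough raw data rate over the wired link.
# ASSUMED values: the sampling rate and bits per sample are not stated in the text.
N_ARRAYS = 2
ELECTRODES_PER_ARRAY = 64
SAMPLE_RATE_HZ = 30_000   # assumption
BITS_PER_SAMPLE = 16      # assumption


def raw_data_rate_bits_per_s(n_channels: int, rate_hz: int, bits: int) -> int:
    """Uncompressed bit rate: every channel is sampled at rate_hz with `bits` bits."""
    return n_channels * rate_hz * bits


n_channels = N_ARRAYS * ELECTRODES_PER_ARRAY  # 128 recording channels
bits_per_s = raw_data_rate_bits_per_s(n_channels, SAMPLE_RATE_HZ, BITS_PER_SAMPLE)

print(f"{n_channels} channels -> {bits_per_s / 1e6:.2f} Mbit/s "
      f"({bits_per_s / 8 / 1e6:.2f} MB/s, {bits_per_s / 8 * 3600 / 1e9:.1f} GB per hour)")
# prints "128 channels -> 61.44 Mbit/s (7.68 MB/s, 27.6 GB per hour)"
```

Under these assumptions the link must sustain tens of megabits per second continuously, before any compression or on-device feature extraction.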

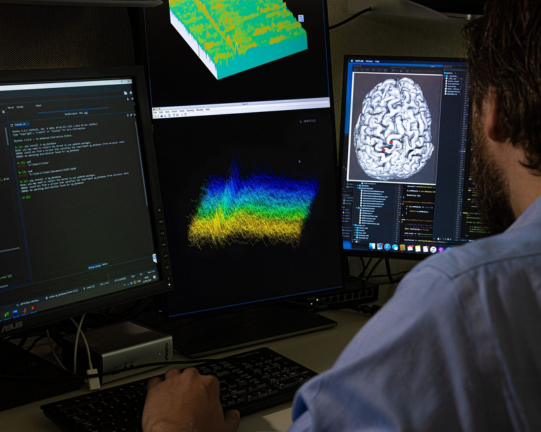

Real time processing of neural signals

The NeuroKey software gathers the neural data from the two microelectrode arrays and decodes and analyzes it in real time. NeuroKey receives a separate signal from every channel, reflecting the activity of individual neurons near each electrode. It computes spike rate and signal power at every channel and, using a machine learning model, decodes the user’s intention and sends a control signal to an auditory feedback speller. NeuroKey’s real-time signal processing and decoding capabilities allow the user to control the speller fluently, without noticeable delay.
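The per-channel features named above can be sketched as follows. This is a minimal illustration under stated assumptions, not the NeuroKey implementation: spikes are counted as negative threshold crossings at -4.5 × RMS, signal power is taken as the mean squared amplitude of the window, and the unspecified machine learning model is stood in for by a logistic model whose output is mapped to a feedback pitch. The sampling rate, threshold factor, tone frequencies and all function names are hypothetical.

```python
import numpy as np

FS_HZ = 30_000    # ASSUMED sampling rate; not stated in the text
N_CHANNELS = 128  # two arrays x 64 electrodes


def rms_thresholds(raw: np.ndarray, k: float = -4.5) -> np.ndarray:
    """Per-channel spike threshold at k x RMS (k = -4.5 is an assumed value)."""
    return k * np.sqrt(np.mean(raw ** 2, axis=1))


def channel_features(raw: np.ndarray, thresholds: np.ndarray) -> np.ndarray:
    """raw: (n_channels, n_samples) window, assumed already band-pass filtered.

    Returns a (2 * n_channels,) vector: spike rate in Hz, then mean signal power.
    """
    below = raw < thresholds[:, None]
    # A threshold crossing is a sample below threshold whose predecessor was not.
    crossings = np.sum(below[:, 1:] & ~below[:, :-1], axis=1)
    spike_rate = crossings / (raw.shape[1] / FS_HZ)
    power = np.mean(raw ** 2, axis=1)
    return np.concatenate([spike_rate, power])


def decode_yes_probability(features: np.ndarray, weights: np.ndarray, bias: float) -> float:
    """Hypothetical stand-in for the decoder: logistic regression giving P('yes')."""
    z = float(features @ weights + bias)
    return float(1.0 / (1.0 + np.exp(-z)))


def feedback_tone_hz(p_yes: float, low_hz: float = 220.0, high_hz: float = 880.0) -> float:
    """Map decoder output to a pitch: closer to 'yes' gives a higher tone (frequencies assumed)."""
    return low_hz + p_yes * (high_hz - low_hz)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    window = rng.normal(size=(N_CHANNELS, FS_HZ // 20))  # one 50 ms window of noise
    feats = channel_features(window, rms_thresholds(window))
    weights = rng.normal(scale=0.01, size=feats.size)    # untrained, illustrative weights
    p = decode_yes_probability(feats, weights, bias=0.0)
    print(f"P(yes) = {p:.2f} -> tone {feedback_tone_hz(p):.0f} Hz")
```

In a real system the weights would be fitted on calibration data in which the user was asked to produce known "yes" and "no" responses; here they are random and only show the data flow from raw window to tone.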

Audio feedback and brain signal regulation

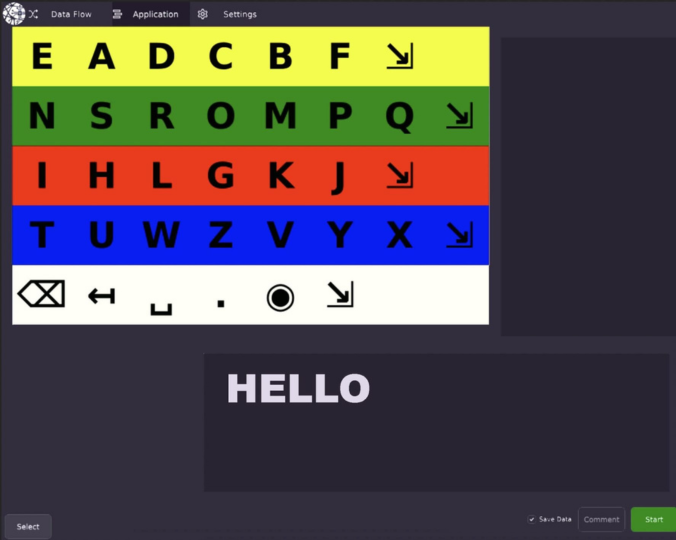

People with advanced ALS can no longer move or focus their eyes, so auditory rather than visual feedback is used. To communicate a word, the user must first spell each letter of that word. A computer-generated voice says each letter of the alphabet aloud, followed by a tone played by the computer that represents the current state of the user’s brain signals. The user regulates their brain signals to alter this tone: a high tone indicates ‘yes, select this letter’, and a low tone indicates ‘no, deselect this letter’. This is repeated until the user has identified and selected all the letters of the word. To avoid going through the whole alphabet for every letter, the letters are arranged in a grid: the computer (and user) first identifies the row containing the required letter and then the letter within that row, which speeds up letter identification and selection.
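The two-stage row and letter selection described above can be sketched as follows. This is a minimal illustration, not the NeuroKey speller: the grid layout, the `answer` callback standing in for the decoded high or low tone, and the simulated user are all hypothetical, and a real system would also need to recover from misclassified answers.

```python
from typing import Callable, List, Optional, Tuple

# HYPOTHETICAL layout: the real speller's grid is not specified in the text.
GRID: List[List[str]] = [
    list("ABCDE"),
    list("FGHIJ"),
    list("KLMNO"),
    list("PQRST"),
    list("UVWXY"),
    list("Z_"),  # '_' stands for a space (assumed)
]

# answer(options) returns True for a decoded "yes" (high tone), False for "no" (low tone).
Answer = Callable[[List[str]], bool]


def select_letter(grid: List[List[str]], answer: Answer) -> Optional[str]:
    """Two-stage selection: first find the row, then the letter within that row."""
    for row in grid:
        if answer(row):  # the voice announces the row, then the tone is evaluated
            for letter in row:
                if answer([letter]):
                    return letter
            return None  # row accepted but no letter confirmed: caller starts over
    return None  # no row accepted


def simulated_user(target: str, log: List[List[str]]) -> Answer:
    """Stand-in for the decoder: answers 'yes' whenever the target is among the options."""
    def answer(options: List[str]) -> bool:
        log.append(options)
        return target in options
    return answer


def spell(word: str, grid: List[List[str]]) -> Tuple[str, int]:
    """Spell a word with the simulated user; returns the text and the number of prompts."""
    log: List[List[str]] = []
    letters = [select_letter(grid, simulated_user(ch, log)) for ch in word]
    return "".join(c for c in letters if c), len(log)
```

With this hypothetical grid, selecting H takes 5 yes/no prompts (two rows, then three letters) instead of 8 when the alphabet is read in order, and Z takes 7 instead of 26.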

Forming words and sentences

Each word is read aloud by a voice synthesizer as soon as the user has formed it by selecting its individual letters. This computer-generated voice ultimately says the complete sentence. The NeuroKey team frequently refines the speller software to integrate predictive text, based on the user’s individual patterns of communication (e.g. a bias towards specific words) or the user’s personal and environmental circumstances (e.g. specific ways of addressing a family member). This allows communication between the user and the computer to speed up over time.
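A minimal sketch of frequency-based predictive text, assuming the speller keeps a history of the user’s past sentences. The `WordPredictor` class and its ranking rule (most frequently used words first, ties broken alphabetically) are hypothetical illustrations, not the method NeuroKey uses, and the sketch ignores context such as who is being addressed.

```python
from collections import Counter
from typing import Iterable, List


class WordPredictor:
    """Suggests previously used words that start with the letters spelled so far."""

    def __init__(self, history: Iterable[str] = ()) -> None:
        self.counts: Counter = Counter()
        for sentence in history:
            self.learn(sentence)

    def learn(self, sentence: str) -> None:
        """Update word frequencies from a sentence the user has completed."""
        self.counts.update(word.lower() for word in sentence.split())

    def suggest(self, prefix: str, k: int = 3) -> List[str]:
        """Return up to k words matching the prefix, most frequent first."""
        prefix = prefix.lower()
        matches = [(w, c) for w, c in self.counts.items() if w.startswith(prefix)]
        matches.sort(key=lambda wc: (-wc[1], wc[0]))  # by frequency, then alphabetically
        return [w for w, _ in matches[:k]]


predictor = WordPredictor(["I want water", "want music", "water please"])
print(predictor.suggest("w"))  # prints ['want', 'water']
```

Offering a suggestion costs a single yes/no prompt; if the user accepts it, the remaining letters of the word do not need to be spelled, and calling `learn()` after each sentence lets the suggestions adapt to the user over time.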

NeuroKey software platform

Enabling real time brain-computer communication

NeuroKey is medical-grade software, suitable for use with implanted devices, and optimized for high channel count, high frequency data processing in real time. It allows the team to rapidly change how the data is processed through its flexible and modular programming interface. The software also has a simple user-interface that allows the family or caregivers to easily launch apps for communication, such as a speller or a quick Yes/No question app, and to recalibrate the system when needed.
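To illustrate what a modular processing interface with recalibration might look like, here is a sketch in which each processing step is an interchangeable stage and recalibration re-fits only the stages that depend on calibration data. The `Pipeline` and `ZScore` classes are hypothetical and do not describe NeuroKey’s actual programming interface.

```python
from typing import Callable, List

import numpy as np

Stage = Callable[[np.ndarray], np.ndarray]


class ZScore:
    """Normalizes each feature with mean and std estimated from a calibration block."""

    def __init__(self) -> None:
        self.mean = None
        self.std = None

    def calibrate(self, samples: np.ndarray) -> None:
        self.mean = samples.mean(axis=0)
        self.std = samples.std(axis=0) + 1e-9  # avoid division by zero

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return (x - self.mean) / self.std


class Pipeline:
    """Chain of interchangeable stages applied in order."""

    def __init__(self, stages: List[Stage]) -> None:
        self.stages = list(stages)

    def swap(self, index: int, stage: Stage) -> None:
        """Replace one processing step without touching the others."""
        self.stages[index] = stage

    def calibrate(self, samples: np.ndarray) -> None:
        """Re-fit stages that have a calibrate() method, each on the output of the stages before it."""
        for stage in self.stages:
            fit = getattr(stage, "calibrate", None)
            if fit is not None:
                fit(samples)
            samples = stage(samples)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        for stage in self.stages:
            x = stage(x)
        return x
```

For example, `Pipeline([np.log1p, ZScore()])` log-transforms features and then normalizes them; calling `calibrate()` on a fresh block of data re-fits the normalization without changing the rest of the chain, and `swap()` replaces a single stage in place.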

The project is laying the foundations for brain-to-computer speech and other assistive tools – for example emergency alarms – for people who cannot ask for help in another way.

Advances in algorithm development and a fully implantable brain-computer interface

The results of the project guide the development of future assistive communication tools. Such tools are key for people with ALS in CLIS, but also have the potential to help people affected by other conditions that impair their ability to move or communicate, including stroke, spinal cord injury, late-stage multiple sclerosis, late-stage Parkinson’s disease and severe cerebral palsy.

Our team is continuously working to improve the NeuroKey software. We are building increasingly accurate and robust brain signal decoders, improving the predictive text of the speller and exploring the integration of other assistive devices, including home automation systems.

We are also developing ABILITY, a fully implantable brain-computer interface for the restoration of movement and communication, to bring cutting-edge technology closer to safe, long-term daily use at home.

The leads and electrodes connected to the prototype ABILITY device are similar to those currently used in this project. In the future, the fully implantable, wireless ABILITY device will replace the cable that connects the electrodes to the computer.