Completely locked-in man uses brain-computer interface to communicate

Two-year study paves the way for new technologies for people with severe paralysis

Geneva, Switzerland – Researchers at the Wyss Center for Bio and Neuroengineering, in collaboration with the University of Tübingen in Germany, have enabled a person with complete paralysis, who cannot speak, to communicate via an implanted brain-computer interface (BCI). The clinical case study, which has been ongoing for more than two years, involves a participant with advanced amyotrophic lateral sclerosis (ALS), a progressive neurodegenerative disease in which people lose the ability to move and talk. The results show that communication is possible with people who are completely locked-in because of ALS. The study is published today in Nature Communications.

Globally, the number of people with ALS is increasing, and more than 300 000 people are projected to be living with the disease by 2040, many of whom will reach a state where speech is no longer possible. With further development, the approach described in this study could enable more people with advanced ALS to maintain communication.

“This study answers a long-standing question about whether people with complete locked-in syndrome (CLIS) – who have lost all voluntary muscle control, including movement of the eyes or mouth – also lose the ability of their brain to generate commands for communication,” said Jonas Zimmermann, PhD, Senior Neuroscientist at the Wyss Center in Geneva. “Successful communication has previously been demonstrated with BCIs in individuals with paralysis. But, to our knowledge, ours is the first study to achieve communication by someone who has no remaining voluntary movement and hence for whom the BCI is now the sole means of communication.”

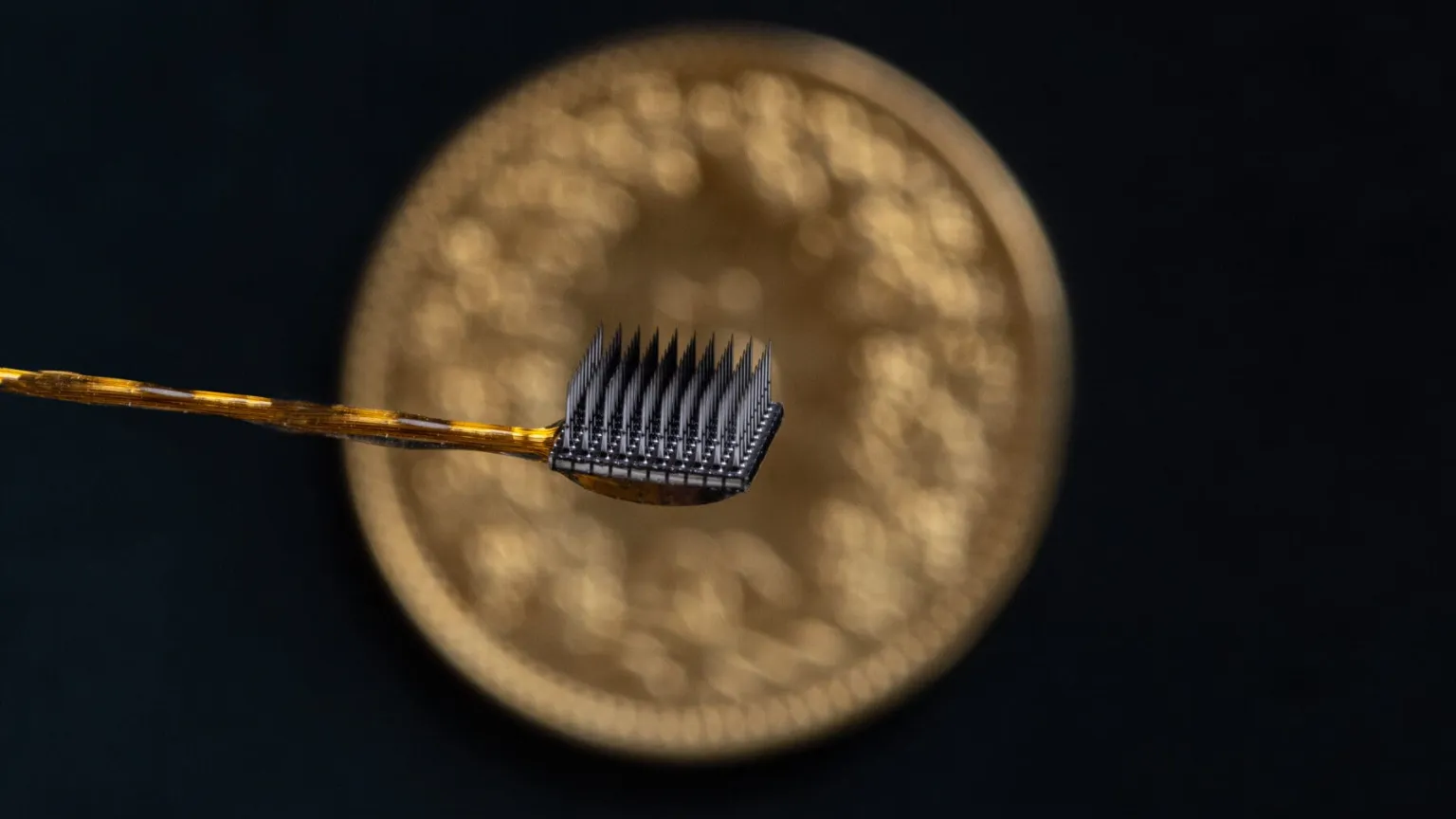

The study participant is a man in his 30s who has been diagnosed with a fast-progressing form of ALS. He has two intracortical microelectrode arrays surgically implanted in his motor cortex.

A communications platform for people with complete locked-in syndrome

The system, developed for people with complete locked-in syndrome, allows communication at home over periods of months to years. It paves the way for new technologies for people with severe paralysis.

Two microelectrode arrays, each 3.2 mm square, were inserted into the surface of the motor cortex – the part of the brain responsible for movement. Each array has 64 needle-like electrodes that record neural signals.

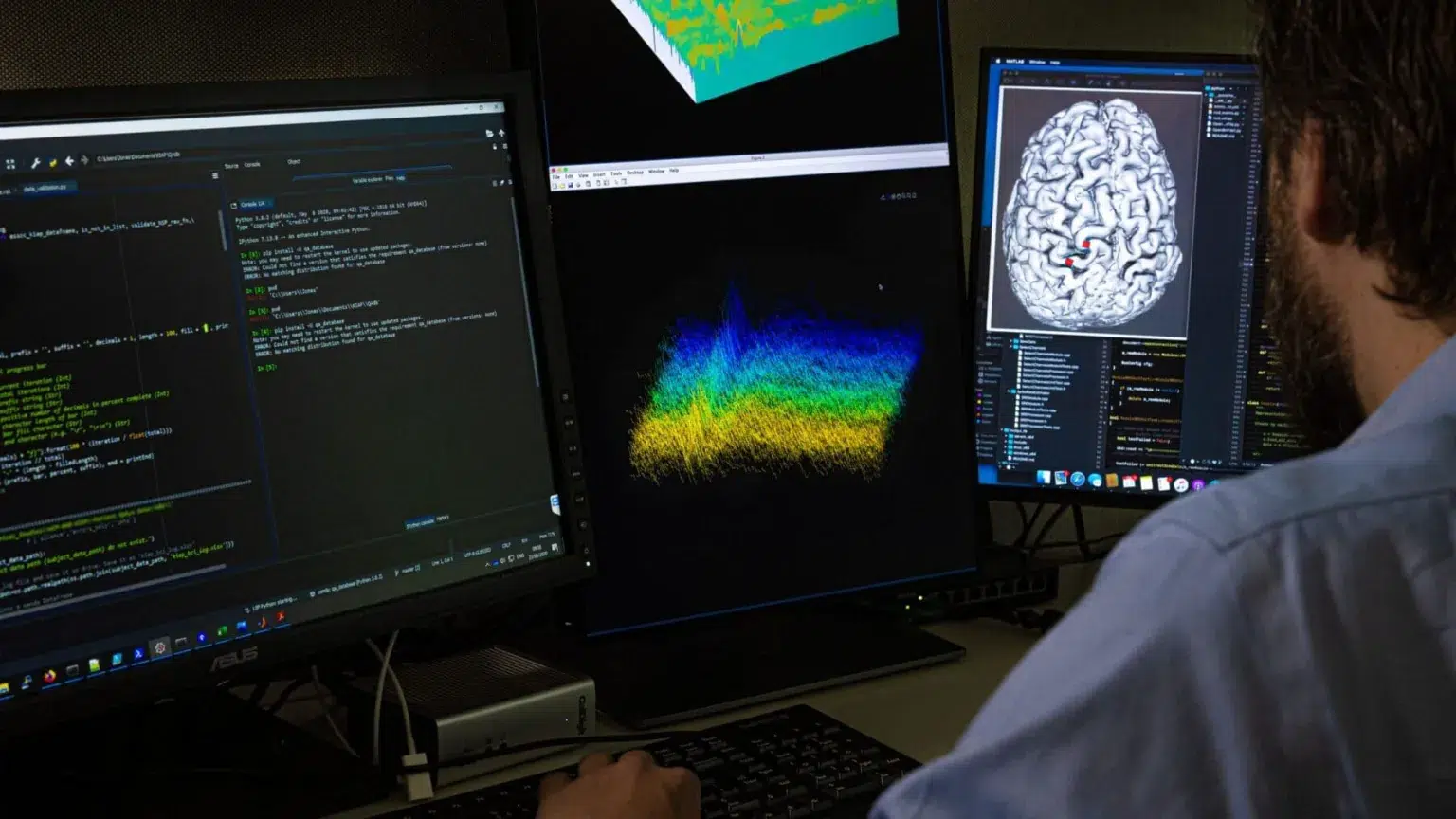

The participant, who lives at home with his family, has learned to generate brain activity by attempting different movements. These brain signals are picked up by the implanted microelectrodes and decoded in real time by a machine learning model, which classifies them as either ‘yes’ or ‘no’. To reveal what the participant wants to communicate, a speller program reads the letters of the alphabet aloud. Using auditory neurofeedback, the participant answers ‘yes’ or ‘no’ to confirm or reject each letter, ultimately forming whole words and sentences.
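The spelling procedure described above can be sketched as a simple scanning loop. This is a minimal sketch under stated assumptions, not the study's software: the `ask` callback is a hypothetical stand-in for one read-aloud prompt followed by the decoder's ‘yes’/‘no’ output, the alphabet (with a space and a hypothetical `<END>` item to finish a message) is assumed, and the published speller may order or group letters differently.

```python
from __future__ import annotations

from typing import Callable, Sequence

END = "<END>"  # hypothetical item the participant selects to finish a message
ALPHABET = list("ABCDEFGHIJKLMNOPQRSTUVWXYZ") + [" ", END]

# ask(item) stands in for: read `item` aloud, then return True if the
# decoder classified the participant's response as 'yes'.
AskFn = Callable[[str], bool]


def spell(ask: AskFn, alphabet: Sequence[str] = ALPHABET, max_passes: int = 200) -> str:
    """Scan the alphabet repeatedly, keeping the first item confirmed with 'yes'."""
    message: list[str] = []
    for _ in range(max_passes):
        # next() stops at the first confirmed item, so later items are not offered.
        chosen = next((item for item in alphabet if ask(item)), None)
        if chosen is None:
            continue  # every item was rejected; start another pass
        if chosen == END:
            break
        message.append(chosen)
    return "".join(message)


def simulated_participant(target: str) -> AskFn:
    """Toy stand-in that answers 'yes' only to the next character it wants."""
    remaining = list(target) + [END]

    def ask(item: str) -> bool:
        if remaining and item == remaining[0]:
            remaining.pop(0)
            return True
        return False

    return ask


print(spell(simulated_participant("HELLO WORLD")))  # HELLO WORLD
```

Bounding the number of passes keeps the loop from running forever if no item is ever confirmed; a real system would also need a way to correct mistaken selections, which this sketch leaves out.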

“This study has also demonstrated that, with the involvement of family or caregivers, the system can in principle be used at home. This is an important step for people living with ALS who are being cared for outside the hospital environment,” said Wyss Center CTO George Kouvas, MBA. “This technology, benefiting a patient and his family in their own environment, is a great example of how technological advances in the BCI field can be translated to create direct impact.”

Future improvement of the system could be key for the CLIS ALS population and could one day help other people who have impaired ability to communicate and move.

The team is also working on ABILITY, a wireless implantable BCI device designed to connect flexibly to either microelectrode arrays or ECoG electrode grids. This will allow detection and processing of signals from either highly specific or larger areas of the brain. The approach could enable speech to be decoded directly from the brain during imagined speech, which could lead to more natural communication.

The system used in this study is not available outside of clinical research investigations.

‘Spelling Interface using Intracortical Signals in a Completely Locked-In Patient enabled via Auditory Neurofeedback Training’ is published in Nature Communications.

The data upon which the findings are based are available at:

https://doi.gin.g-node.org/10.12751/g-node.jdwmjd/

The underlying code for the BCI is available at:

https://doi.org/10.12751/g-node.ihc6qn

Neural data are decoded and analyzed in real time to control the speller software.
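As an illustration of real-time decoding, the sketch below turns binned spike counts into per-channel firing rates and assigns each response window to ‘yes’ or ‘no’. It assumes a hypothetical 50 ms bin width and uses a nearest-centroid classifier chosen for brevity; this is a stand-in, not the machine learning model used in the study. With two 64-electrode arrays, a feature vector could have up to 128 entries; the toy example uses two channels.

```python
from __future__ import annotations

from statistics import fmean
from typing import Sequence

BIN_SECONDS = 0.05  # hypothetical bin width; not taken from the study


def firing_rates(spike_counts: Sequence[Sequence[int]],
                 bin_seconds: float = BIN_SECONDS) -> list[float]:
    """Mean firing rate (Hz) per channel over one response window.

    spike_counts[t][c] is the number of spikes on channel c in time bin t.
    """
    window_seconds = len(spike_counts) * bin_seconds
    return [sum(channel) / window_seconds for channel in zip(*spike_counts)]


class NearestCentroidDecoder:
    """Hypothetical binary decoder: predicts the class whose mean feature vector is closest."""

    def __init__(self) -> None:
        self.centroids: dict[str, list[float]] = {}

    def fit(self, features: Sequence[Sequence[float]],
            labels: Sequence[str]) -> NearestCentroidDecoder:
        for label in set(labels):
            rows = [f for f, lab in zip(features, labels) if lab == label]
            # Column-wise mean across all training windows with this label.
            self.centroids[label] = [fmean(col) for col in zip(*rows)]
        return self

    def predict(self, feature: Sequence[float]) -> str:
        def squared_distance(label: str) -> float:
            return sum((a - b) ** 2 for a, b in zip(feature, self.centroids[label]))

        return min(self.centroids, key=squared_distance)


# Toy training data: firing rates (Hz) on two channels for labelled windows.
decoder = NearestCentroidDecoder().fit(
    [[50.0, 10.0], [45.0, 12.0], [10.0, 10.0], [8.0, 11.0]],
    ["yes", "yes", "no", "no"],
)
window = [[3, 0], [2, 1]]  # two 50 ms bins, two channels
print(decoder.predict(firing_rates(window)))  # yes
```

In a live system, each decoded label would feed the speller as the answer to the letter just read aloud.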


Selected media coverage

LISTEN: Olivier Coquoz, Wyss Center COO, discusses the project on CQFD, the Swiss national radio science show (interview in French; transcript in English).

WATCH: George Kouvas, Wyss Center CTO, discusses the project on Greek TV ERT3 (interview in Greek).