Neuroethics: Neurotech experts call for new measures to ensure brain-controlled devices are beneficial and safe

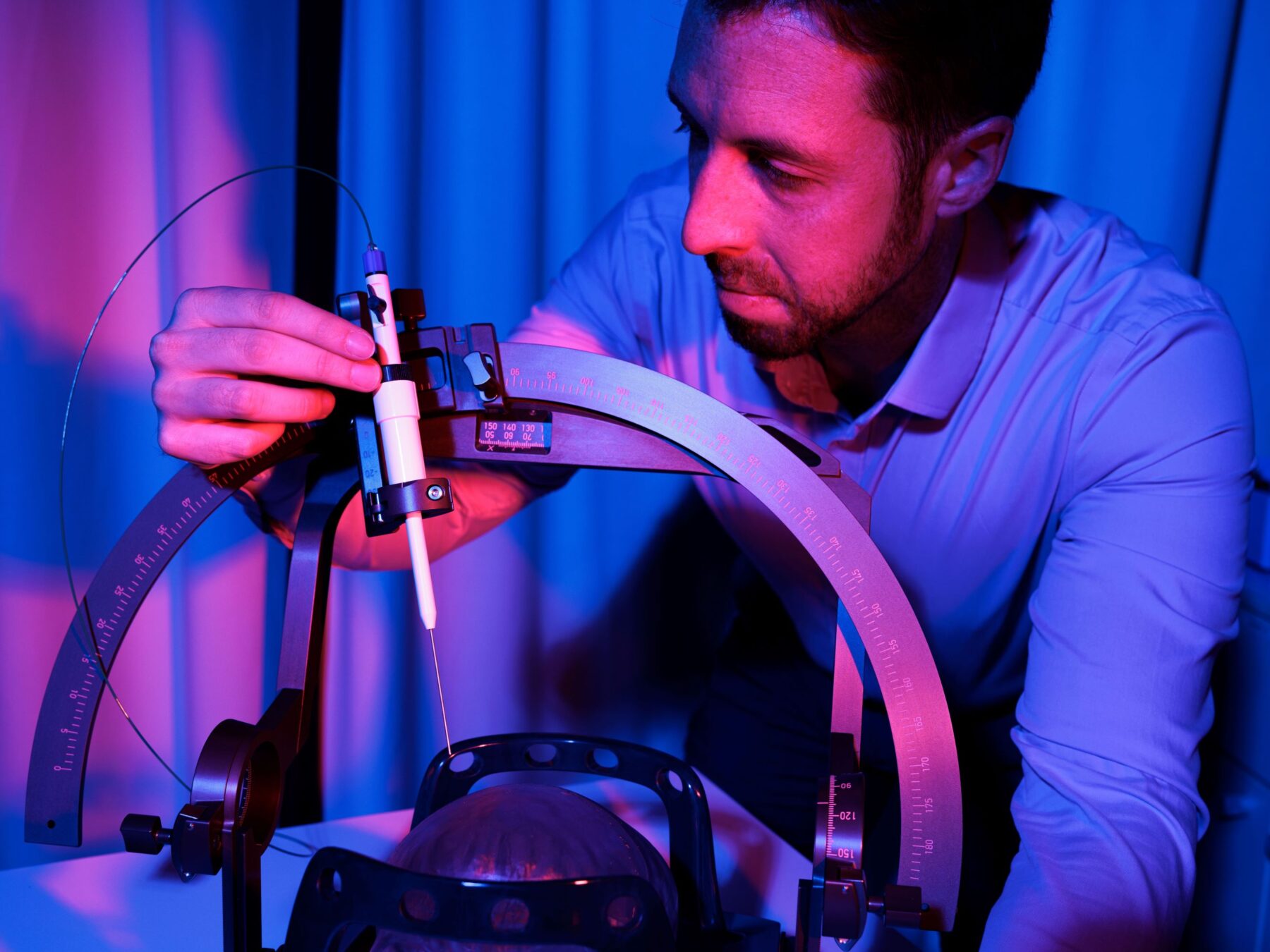

As brain-controlled robots enter everyday life, an article published in Science states that now is the time to take action and put in place guidelines that ensure the safe and beneficial use of direct brain-machine interaction.

Accountability, responsibility, privacy and security are all key when considering the ethical dimensions of this emerging field.

If a semi-autonomous robot did not have a reliable control or override mechanism, a person might be considered negligent if they used it to pick up a baby, but not if they used it for other, less risky activities. The authors propose that any semi-autonomous system should include a form of veto control – an emergency stop – to help overcome some of the inherent weaknesses of direct brain-machine interaction.

Professor John Donoghue, Director of the Wyss Center for Bio and Neuroengineering in Geneva, Switzerland, and co-author of the paper, said: “Although we still don’t fully understand how the brain works, we are moving closer to being able to reliably decode certain brain signals. We shouldn’t be complacent about what this could mean for society. We must carefully consider the consequences of living alongside semi-intelligent brain-controlled machines and we should be ready with mechanisms to ensure their safe and ethical use.”

“We don’t want to overstate the risks nor build false hope for those who could benefit from neurotechnology. Our aim is to ensure that appropriate legislation keeps pace with this rapidly progressing field.”

Protecting biological data recorded by brain-machine interfaces (BMIs) is another area of concern. Security solutions should include data encryption, information hiding and network security. Guidelines for patient data protection already exist for clinical studies, but these standards differ across countries and may not apply as rigorously to purely human laboratory research.

Professor Niels Birbaumer, Senior Research Fellow at the Wyss Center in Geneva (formerly at University of Tübingen, Germany), and co-author of the paper, said: “The protection of sensitive neuronal data from people with complete paralysis who use a BMI as their only means of communication is particularly important. Successful calibration of their BMI depends on brain responses to personal questions provided by the family (for example, ‘Your daughter’s name is Emily?’). Strict data protection must be applied to all people involved; this includes protecting the personal information asked in questions as well as the protection of neuronal data to ensure the device functions correctly.”

The possibility of ‘brainjacking’ – the malicious manipulation of brain implants – is a serious consideration, say the authors. While BMI systems to restore movement or communication to paralysed people do not initially seem an appealing target, this could depend on the status of the user – a paralysed politician, for example, might be at increased risk of a malicious attack as brain readout improves.

The article, “Help, hope and hype: ethical dimensions of neuroprosthetics”, by Jens Clausen, Eberhard Fetz, John Donoghue, Junichi Ushiba, Ulrike Spörhase, Jennifer Chandler, Niels Birbaumer and Surjo R. Soekadar, is published in Science.